Interactive Example-based Hatching

Description:

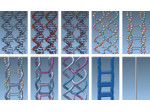

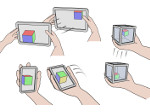

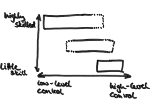

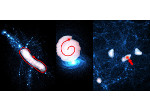

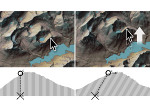

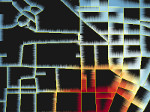

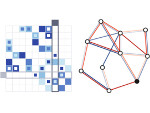

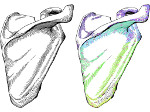

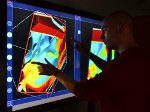

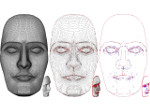

We present an approach for interactively generating pen-and-ink hatching renderings based on hand-drawn examples. We aim to overcome the regular, synthetic appearance of the results of existing methods by incorporating human virtuosity and illustration skills into the computer generation of such imagery. To achieve this goal, we propose to integrate automatic style transfer with user interaction. This approach leverages the potential of example-based hatching while giving users the control and creative freedom to enhance the aesthetic appearance of the results. Using a scanned hatching illustration as input, we learn a model of its drawing style with image processing and machine learning methods. We then apply this model to semi-automatically synthesize hatching illustrations of 3D meshes in the learned drawing style. In the learning stage, we first use image processing to establish an analytical description of the hand-drawn example illustration. A 3D scene registered with the example drawing allows us to infer object-space information related to the 2D drawing elements. We employ a hierarchical style transfer model that captures drawing characteristics on four levels of abstraction: global, patch, stroke, and pixel. In the synthesis stage, an explicit representation of hatching strokes and hatching patches enables us to synthesize the learned hierarchical drawing characteristics. This representation also makes it possible to interact directly and intuitively with the hatching illustration. Among other interactions, users of our system can brush patches of hatching strokes onto a 3D mesh. This capability allows illustrators working with our system to make use of their artistic skills, while people without a background in hatching can also interactively generate visually appealing hatching illustrations.
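To make the patch and stroke levels of an explicit hatching representation more concrete, here is a minimal Python sketch. It is not the authors' implementation: the names `Stroke`, `HatchingPatch`, and `synthesize_patch` are hypothetical, a patch is assumed to be a set of evenly spaced parallel 2D polylines, and the random per-point wobble only stands in for pixel-level irregularity rather than anything learned from an example drawing.

```python
import math
import random
from dataclasses import dataclass, field


@dataclass
class Stroke:
    """Stroke level (hypothetical): one hatching line as a 2D polyline."""
    points: list[tuple[float, float]]


@dataclass
class HatchingPatch:
    """Patch level (hypothetical): roughly parallel strokes sharing a direction."""
    direction: float  # hatching direction in radians
    spacing: float    # distance between neighbouring strokes
    strokes: list[Stroke] = field(default_factory=list)


def synthesize_patch(center, direction, spacing, length, n_strokes,
                     jitter=0.0, samples=8, seed=None):
    """Build a patch of evenly spaced parallel strokes centred on `center`.

    `jitter` adds a random perpendicular offset to every sample point, a crude
    stand-in for pixel-level irregularity (an assumption, not a learned model).
    """
    if samples < 2:
        raise ValueError("each stroke needs at least two sample points")
    rng = random.Random(seed)
    dx, dy = math.cos(direction), math.sin(direction)  # unit vector along strokes
    nx, ny = -dy, dx  # unit normal: strokes are stacked along this axis
    patch = HatchingPatch(direction, spacing)
    for i in range(n_strokes):
        # Offsets are symmetric about the centre (five strokes, spacing 2: -4 ... 4).
        offset = (i - (n_strokes - 1) / 2) * spacing
        ox, oy = center[0] + offset * nx, center[1] + offset * ny
        points = []
        for k in range(samples):
            t = (k / (samples - 1) - 0.5) * length  # position along the stroke
            w = rng.uniform(-jitter, jitter)          # perpendicular wobble
            points.append((ox + t * dx + w * nx, oy + t * dy + w * ny))
        patch.strokes.append(Stroke(points))
    return patch
```

For example, `synthesize_patch((0.0, 0.0), math.pi / 4, 2.0, 10.0, 5, jitter=0.3, seed=7)` yields five slightly wobbly diagonal strokes. A full system would drive parameters such as direction and spacing from the learned style model and the 3D mesh; this sketch only covers laying out a single patch in 2D.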

Paper download: (18.0 MB)

Additional Material:

- Slides from the presentation at Expressive 2013 (PDF, 4.9 MB)

Main Reference:

Moritz Gerl and Tobias Isenberg (2013) Interactive Example-based Hatching. Computers & Graphics, 37(1–2):65–80, February–April 2013.

Other References:

Moritz Gerl (2013) Explorations in Interactive Illustrative Rendering. PhD thesis, University of Groningen, The Netherlands, March 2013.

This work was done at the Scientific Visualization and Computer Graphics Lab of the University of Groningen, the Netherlands.