Interaction with Large Displays

As the resolution of today's interactive displays grows and multiple users interact with them concurrently, it becomes increasingly difficult to keep the system responsive. I work on applying techniques from computer graphics to address the complexity issues of such interfaces. Specifically, I use the idea of image buffers to store information about the interactive environment.

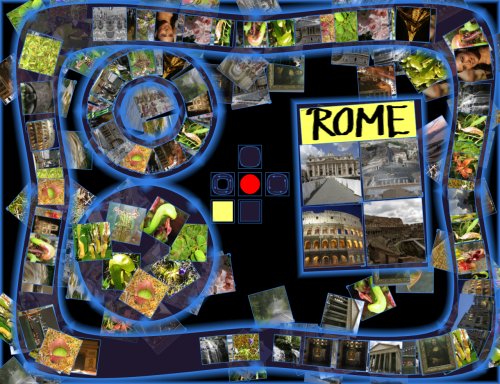

Image buffers have been used before, for example to achieve hidden surface removal (the z-buffer) and, particularly in non-photorealistic rendering, to create expressive renditions and to steer agents that, e.g., place strokes. By using such buffers to store properties of an interface, I have been able to regain interactivity and responsiveness on large displays and to increase rendering speed by more than an order of magnitude over previous implementations. The accompanying screenshot shows an application that was built on this approach and that maintains high frame rates while users are interacting with it.

Interactive multi-user tabletop application.
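To illustrate the general idea of storing interface properties in a buffer, here is a minimal sketch. It is not the implementation behind the application shown; the class `IdBuffer`, its methods, and the cell size are hypothetical names and values chosen for illustration. The buffer is a coarse grid in which each cell stores the id of the topmost item covering it, so finding the item under a touch point is a single lookup whose cost does not depend on how many items are on the display.

```python
class IdBuffer:
    """Coarse grid storing the id of the topmost item per cell (illustrative)."""

    EMPTY = -1

    def __init__(self, width, height, cell=4):
        # 'cell' is an assumed resolution: one buffer entry per cell x cell pixels.
        self.cell = cell
        self.cols = (width + cell - 1) // cell
        self.rows = (height + cell - 1) // cell
        self.cells = [[self.EMPTY] * self.cols for _ in range(self.rows)]

    def draw_rect(self, item_id, x, y, w, h):
        """Rasterize an axis-aligned item; later draws overwrite earlier ones."""
        c0 = max(0, x // self.cell)
        c1 = min(self.cols, (x + w + self.cell - 1) // self.cell)
        r0 = max(0, y // self.cell)
        r1 = min(self.rows, (y + h + self.cell - 1) // self.cell)
        for r in range(r0, r1):
            for c in range(c0, c1):
                self.cells[r][c] = item_id

    def pick(self, x, y):
        """Return the id stored at a display position, or EMPTY if none."""
        c, r = x // self.cell, y // self.cell
        if 0 <= r < self.rows and 0 <= c < self.cols:
            return self.cells[r][c]
        return self.EMPTY


buf = IdBuffer(64, 64, cell=4)
buf.draw_rect(1, 0, 0, 32, 32)    # item 1 is drawn first
buf.draw_rect(2, 16, 16, 32, 32)  # item 2 overlaps it and ends up on top
touched = buf.pick(20, 20)        # lookup cost is independent of item count
```

The trade-off in a sketch like this is that the buffer has to be updated whenever items move, in exchange for constant-time queries while many users interact at once.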

In addition, large displays both require and offer the opportunity for entirely new interaction techniques because they differ considerably from the typical setup of small monitor, keyboard, and mouse. They may use new input technologies such as direct touch, may be horizontal instead of vertical, may offer different degrees of input accuracy or freedom, and increasingly allow several people to interact simultaneously.

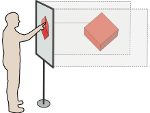

One example of such a novel setup allows for free-hand interaction in open space, as if one were using both hands to interact with a virtual vertical display. We use an inexpensive infrared capturing system based on the Wii Remote and passive markers, and we investigate techniques for robust pairing and pinching detection in order to identify which point belongs to which hand.

Presenting using Two-Handed Interaction in Open Space.
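The pairing and pinching problem can be sketched as follows. This is only an illustration under stated assumptions, not the technique used in the project: it assumes that exactly four infrared points are visible, that each hand carries two markers (for example on thumb and index finger), and that the two hands do not cross. The function names and the pinch threshold are hypothetical.

```python
import math


def dist(a, b):
    """Euclidean distance between two 2D points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def pair_points(points):
    """Split four points into two hands by minimizing within-pair distance.

    Assumption: markers on the same hand are closer to each other than to
    markers on the other hand.
    """
    if len(points) != 4:
        raise ValueError("expected exactly four points")
    p = points
    # There are only three ways to split four points into two pairs.
    partitions = [((0, 1), (2, 3)), ((0, 2), (1, 3)), ((0, 3), (1, 2))]
    best = min(
        partitions,
        key=lambda s: dist(p[s[0][0]], p[s[0][1]]) + dist(p[s[1][0]], p[s[1][1]]),
    )
    hands = [(p[i], p[j]) for i, j in best]
    # Assumption: the pair with the smaller mean x is the left hand
    # (this flips if the camera image is mirrored).
    hands.sort(key=lambda h: (h[0][0] + h[1][0]) / 2)
    return hands


def is_pinching(hand, threshold=30.0):
    """A hand counts as pinching when its two markers are close together.

    The threshold is in camera units and is an arbitrary illustrative value.
    """
    return dist(hand[0], hand[1]) < threshold


points = [(100, 200), (700, 210), (130, 230), (705, 215)]
left, right = pair_points(points)
```

A sketch like this breaks down exactly where robustness matters: when markers merge into a single point during a pinch, when a marker is occluded, or when the hands come close to each other. A robust method would need to handle these cases, for instance by tracking points over time.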

Selected Demos, Videos, and Projects: