Project Gallery

Skittlecam

by Marijn Hofstra and Alex Hamelink

Wii controllers and mobile phones already let a user interface with a computer through orientation and motion. We take an alternative route by handing the user a predetermined physical object. By analyzing the object with a fixed camera, we let the user tilt and rotate the object and translate these movements to an object on the screen. Possible applications we can think of are

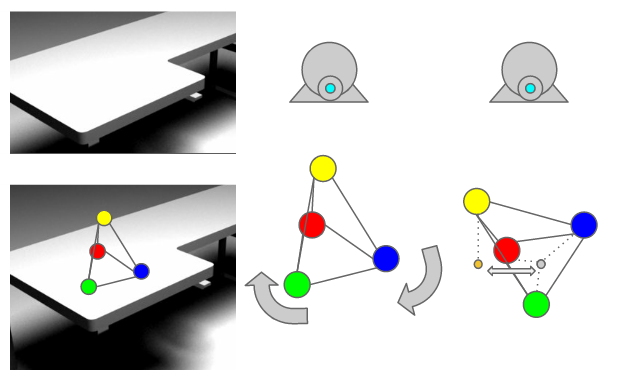

This project differs most from other approaches in that our physical object is not a device with a set of gyroscopes (such as a Wii controller).

Project setup

We used a laptop, a 720p HD camera and an equilateral (regular) tetrahedron with a colored sphere on each of its four corners. The tetrahedron was built from wooden sticks, styrofoam spheres, some glue and four colored markers. Proper lighting is required, preferably daylight: place the camera near a window, pointing away from it.

We used the Processing toolkit along with the JMyron and OBJLoader plugins for Processing. Although we do not use the JMyron detection features, we do use its core image processing structure for accessing the camera pixel data efficiently. The OBJLoader plugin is used to load a 3D model that is displayed in an OpenGL window.

We start the application, let it capture a few frames for the initial background model, and introduce the physical object once this is completed. The object on the screen is rotated and/or tilted whenever the camera detects all four colored corners of the physical object.

Image detection

We implemented a statistical background detection algorithm to separate foreground and background pixels. The foreground pixels that remain are then classified:

For each of the four sphere colors, clusters of pixels that are too small, too large or too sparse are eliminated. We then pick the largest remaining blob per color. If we find a blob of each color, we designate the center coordinates of each blob/sphere as one of the four tetrahedron corners and send the coordinates to the display process (which uses a simple averaging mechanism to remove jitter and erratic motion).

The yellow sphere (the top of the tetrahedron) is used for tilt; the other three spheres form the base of the tetrahedron and are used for rotation. Whether the three base spheres appear in clockwise order tells us whether the yellow sphere is "in front of" or "behind" the base plane (relative to the camera). By using known properties of equilateral tetrahedra, we convert these 2D coordinates to 3D and subsequently calculate the resulting 3D orientation of the object on the screen.

Performance, accuracy and usability

We used a cheap camera, an Asus Eee 901 laptop, an imprecisely built tetrahedron and styrofoam spheres colored non-uniformly with a marker. Proper lighting conditions resulted in decent accuracy; under artificial lighting, the parameters needed tweaking. The detection framerate was about 6-10 frames per second at 640x480, which we found sufficient to keep things "interactive".

Demo

The demo is split into two parts due to technical issues displaying both visual feeds at the same time. The second demo was recorded shortly after the first demo, using the same setup and settings.

Demo 1: This shows the detection part. At the start, only the detected sphere pixels are shown. After a while, all the foreground pixels are shown (including shadow pixels). Shortly after, some lighting conditions change, causing a lot of background pixels to be detected as shadow pixels.
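The three pixel labels visible in Demo 1 (background, shadow, foreground) can be illustrated with a small per-pixel statistical model. This is a minimal sketch in Python rather than the Processing code used in the project, and it is not the method of the cited article: the function names `build_background` and `classify`, the brightness-only pixel representation, the threshold `k` and the shadow band `shadow_lo`/`shadow_hi` are all assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def build_background(frames):
    """Per-pixel mean and standard deviation over the initial frames.

    Each frame is a flat list of brightness values (0-255); all frames
    must have the same length. (Hypothetical representation.)
    """
    columns = list(zip(*frames))  # one tuple of samples per pixel
    means = [mean(c) for c in columns]
    # Floor the deviation at 1.0 so a perfectly static pixel still
    # tolerates a little sensor noise (assumed value).
    stds = [max(pstdev(c), 1.0) for c in columns]
    return means, stds


def classify(pixel, mu, sigma, k=3.0, shadow_lo=0.5, shadow_hi=0.9):
    """Label one pixel as 'background', 'shadow' or 'foreground'.

    k, shadow_lo and shadow_hi are illustrative thresholds, not values
    taken from the project.
    """
    # Close to the learned mean: still background.
    if abs(pixel - mu) <= k * sigma:
        return "background"
    # Darker than the background by a moderate factor: treat as shadow.
    ratio = pixel / mu if mu > 0 else 0.0
    if shadow_lo <= ratio <= shadow_hi:
        return "shadow"
    return "foreground"


# Three initial frames of a two-pixel "image".
means, stds = build_background([[100, 200], [102, 198], [98, 202]])
labels = [classify(p, m, s) for p, m, s in zip([70, 250], means, stds)]
```

Under a test of this kind, a scene-wide drop in brightness moves many background pixels into the shadow band, which is one plausible reading of the effect at the end of Demo 1.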

Demo 2: This shows the 3D object that rotates and tilts based on detected sphere coordinates. Note that the movements are not based on the first demo; this is a second recording.
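Two steps that feed the display in Demo 2 can be sketched in a few lines: the averaging that damps jitter in the sphere coordinates, and the winding test on the three base spheres. This is an illustrative Python sketch, not the project's Processing code; the names `PointSmoother`, `signed_area` and `apex_in_front`, the window size, and the convention that a clockwise base means "in front" are assumptions.

```python
from collections import deque


class PointSmoother:
    """Moving average over the last `window` 2D points (assumed scheme)."""

    def __init__(self, window=5):
        self.history = deque(maxlen=window)  # old points drop out automatically

    def update(self, point):
        self.history.append(point)
        n = len(self.history)
        return (sum(p[0] for p in self.history) / n,
                sum(p[1] for p in self.history) / n)


def signed_area(a, b, c):
    """Twice the signed area of triangle a-b-c (cross product of two edges).

    In image coordinates (y grows downward) a positive value means the
    points appear in clockwise order on screen.
    """
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])


def apex_in_front(base_a, base_b, base_c):
    """Assumed convention: clockwise base order means the top sphere
    is in front of the base plane, counterclockwise means behind."""
    return signed_area(base_a, base_b, base_c) > 0


smoother = PointSmoother(window=2)
smoother.update((0, 0))
smoothed = smoother.update((10, 20))   # average of the two points so far
front = apex_in_front((0, 0), (10, 0), (0, 10))
```

The winding test only needs the sign of the area, so it stays stable even when the sphere centers are a few pixels off; which sign corresponds to "in front" depends on the order in which the three base colors are passed in.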

Sources

We used the article http://dx.doi.org/10.1109/ICISA.2010.5480276 for inspiration on separating background, foreground and shadow pixels.

Page last modified on June 11, 2012, at 12:32 PM