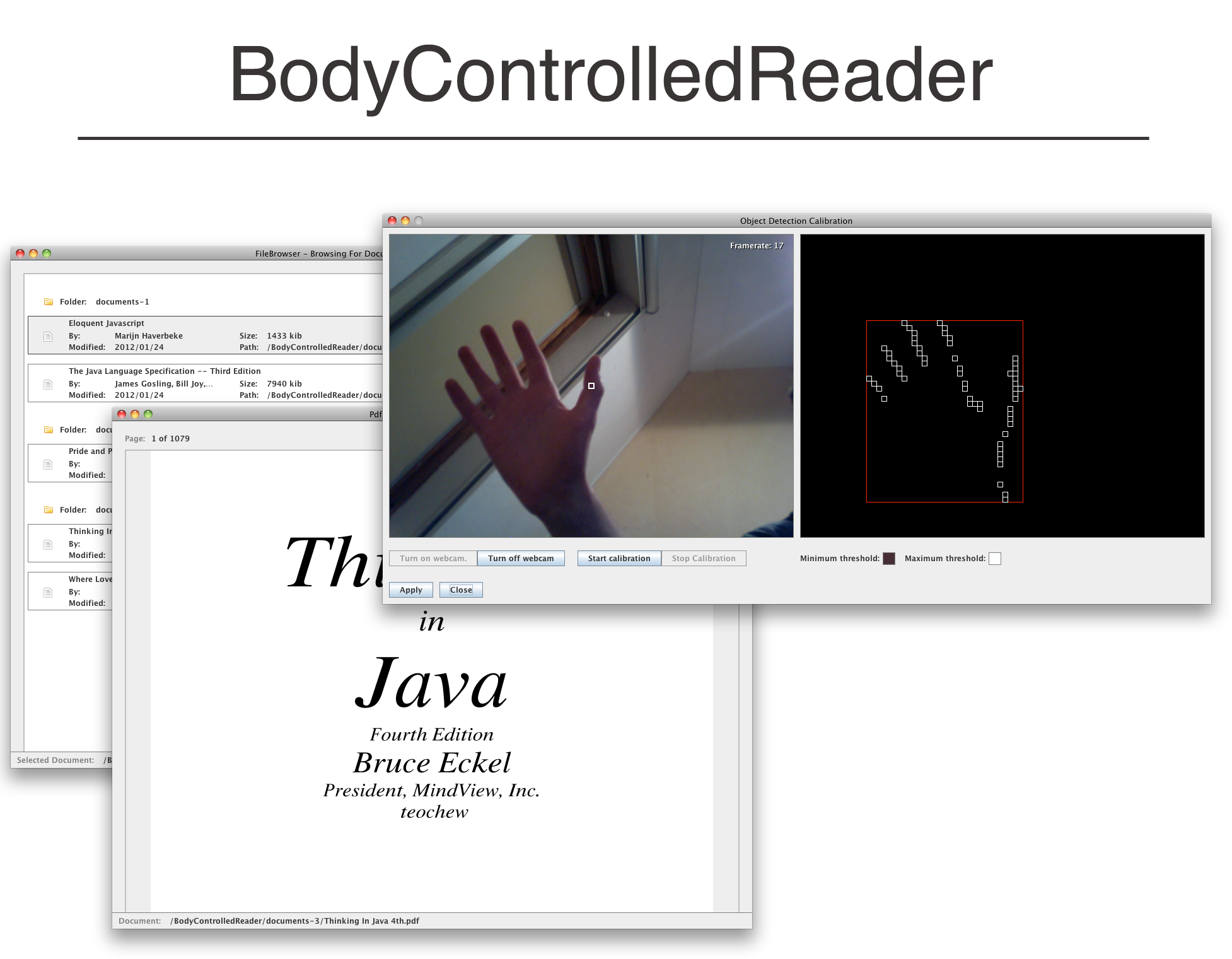

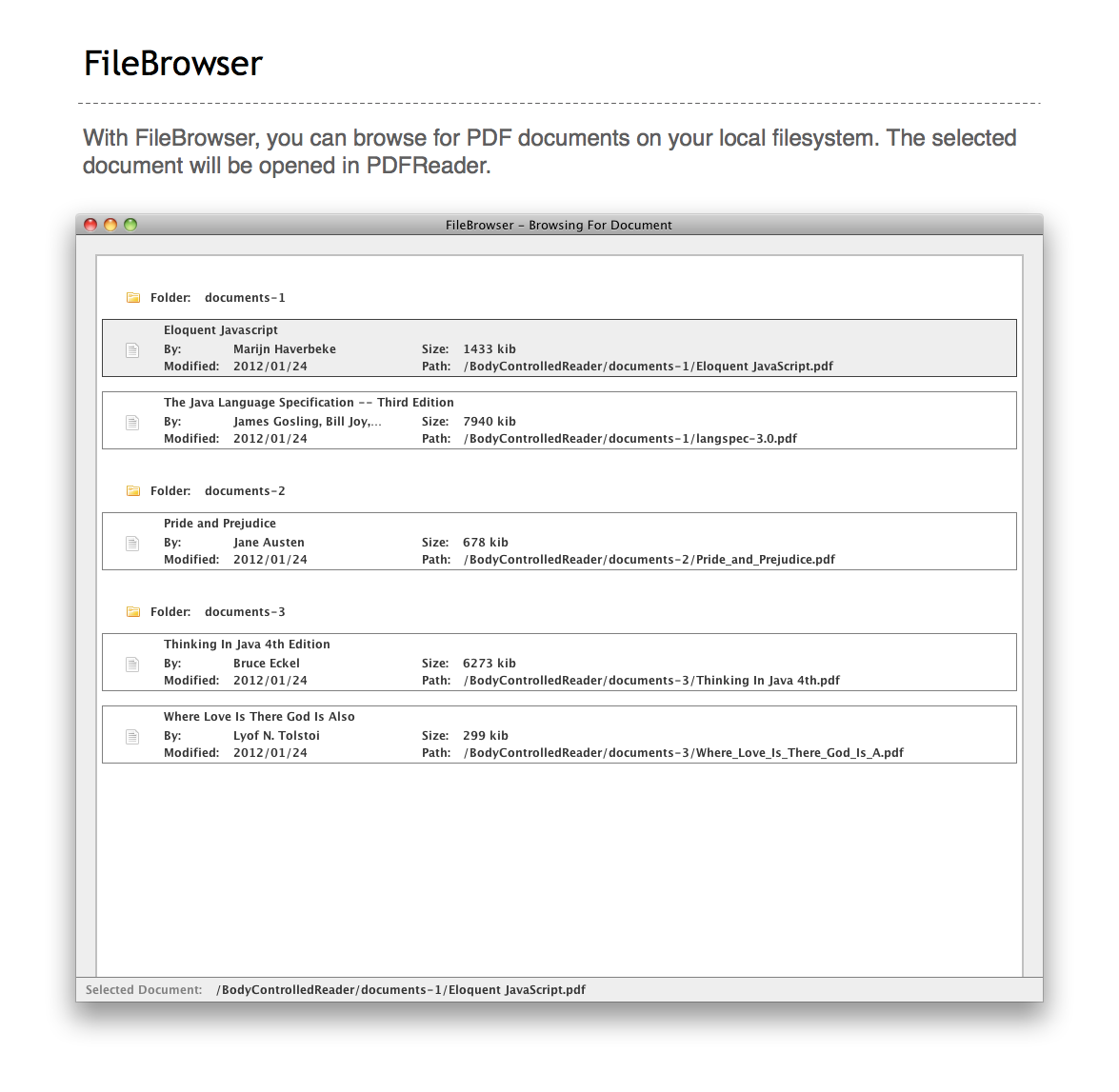

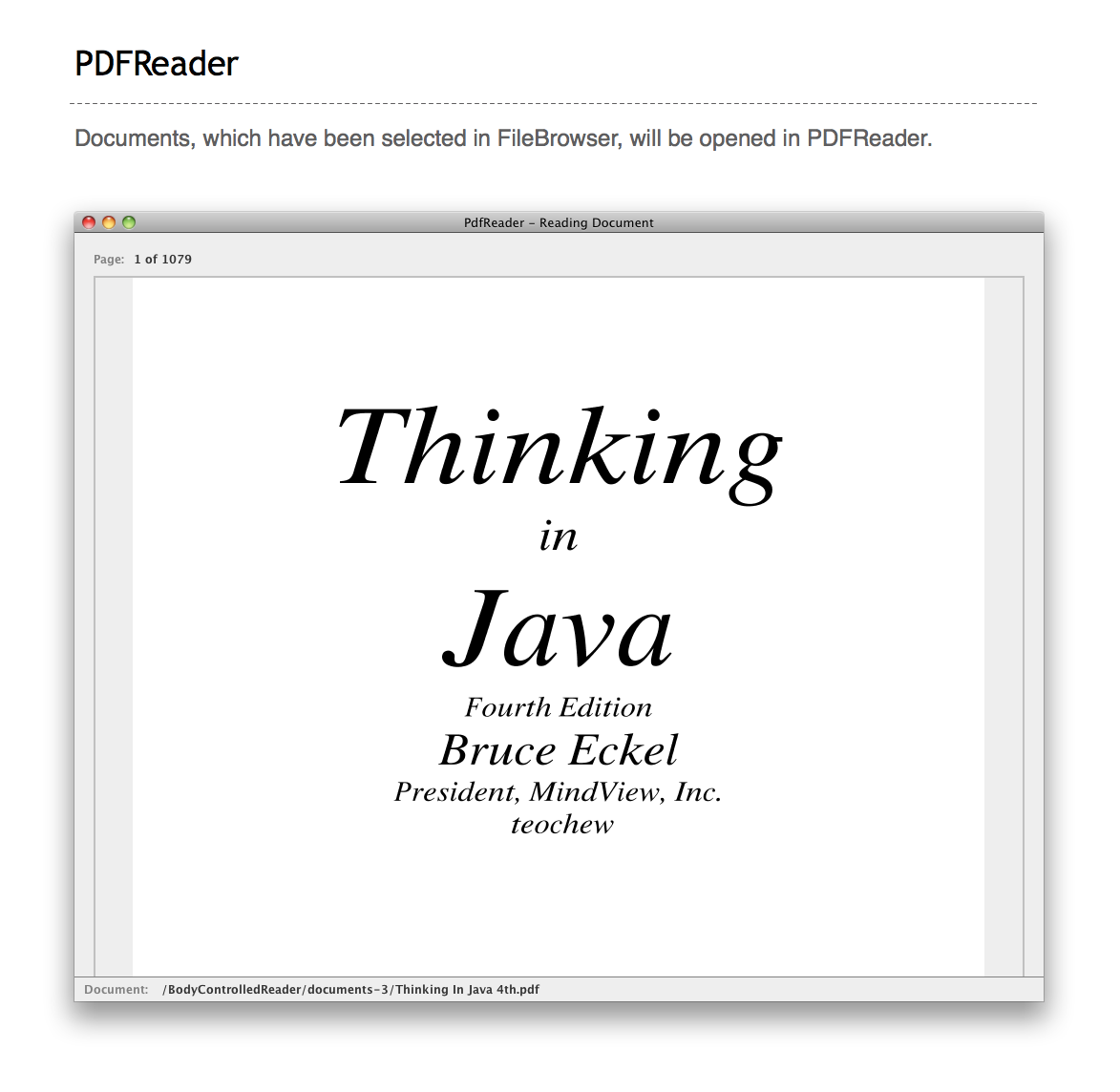

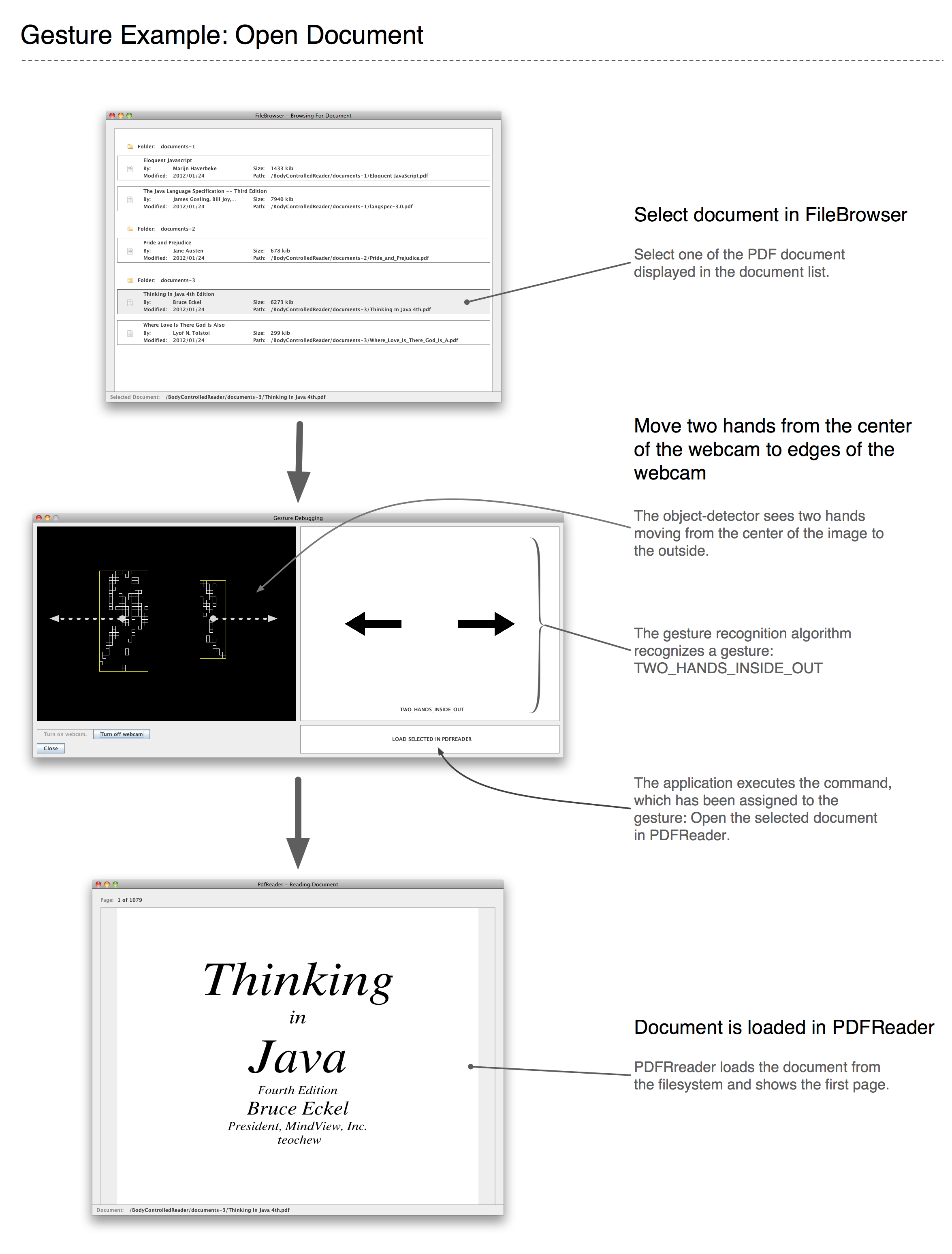

By Anonymous* and Jens van der Meer

What is BodyControlledReader?

The goal of the BodyControlledReader project is to create a PDF reading application that is controlled by tracking gestures made with the hands**. The gestures are detected via a webcam. A secondary goal of the project is to assess how practical it is to read PDF documents while controlling them via gestures.

The final program is written in Java and uses the free OpenCV library to capture images from a webcam. The PDFRenderer library was chosen to display the pages of PDF documents. The algorithms for detecting the hands and recognizing gestures were custom-made for this project. Java was chosen for its cross-platform abilities; as a result, BodyControlledReader runs on both Windows and Mac OS X.

How does BodyControlledReader work?

With BodyControlledReader one can:

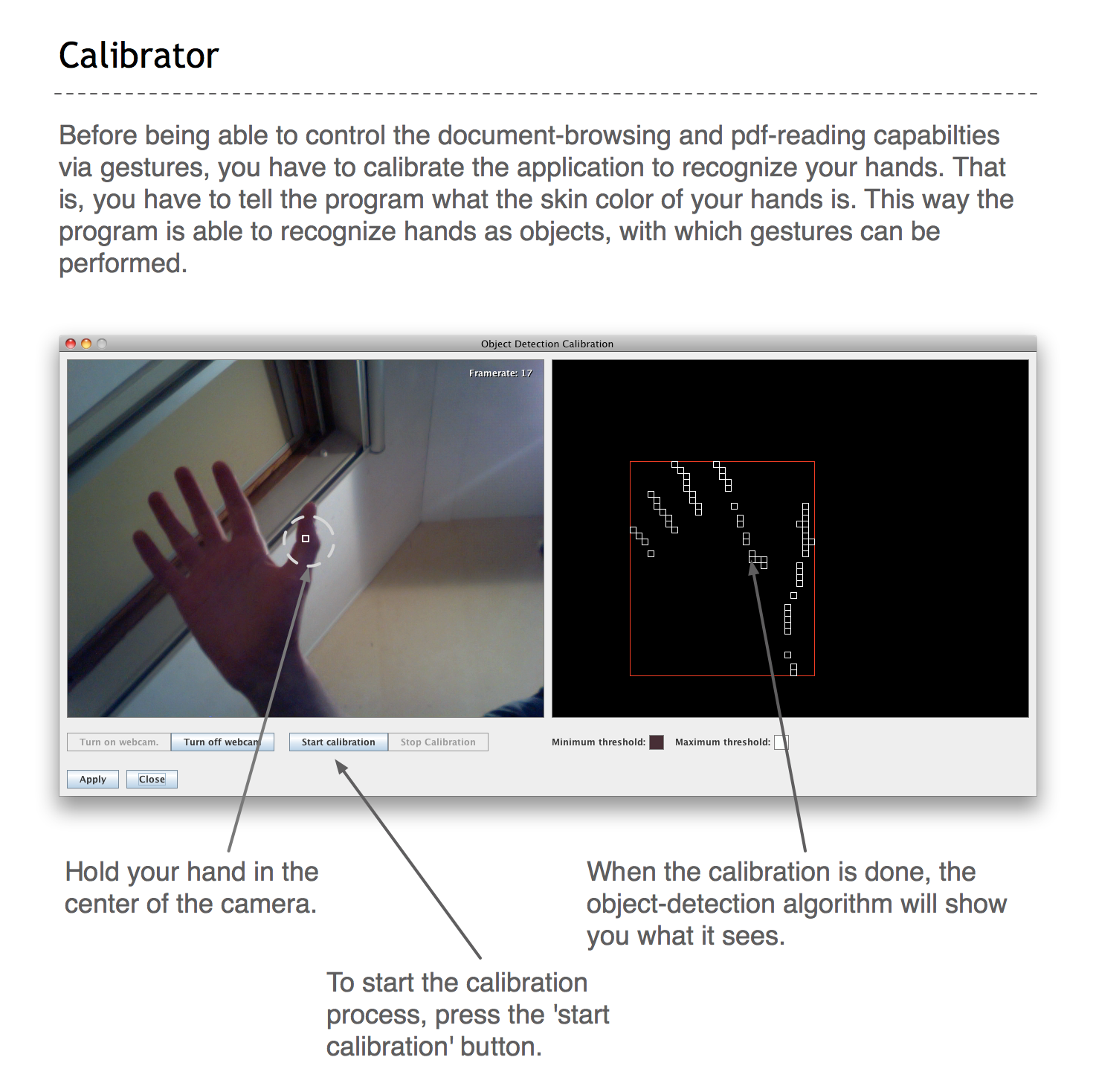
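This page does not describe the project's custom gesture-recognition algorithm. As an illustration only, the sketch below shows one simple way to recognize a horizontal swipe from the hand's position in successive webcam frames. An example is the left-to-right movement that moves to the next page.

The class `SwipeRecognizer`, its `Command` values, the window size and the travel threshold are all hypothetical. The sketch also assumes that an earlier stage already reports the hand's x position, normalized so that 0.0 is the left edge and 1.0 the right edge of the webcam image.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/**
 * Hypothetical sketch of a horizontal swipe recognizer; not the project's
 * actual algorithm. Input is the hand's x position per frame, normalized so
 * that 0.0 is the left edge and 1.0 the right edge of the webcam image.
 */
class SwipeRecognizer {

    /** Commands a swipe can trigger (hypothetical names). */
    enum Command { NONE, NEXT_PAGE, PREVIOUS_PAGE }

    private final int windowSize;   // how many recent frames a swipe may span
    private final double minTravel; // required horizontal travel (fraction of image width)
    private final Deque<Double> recentX = new ArrayDeque<>();

    SwipeRecognizer(int windowSize, double minTravel) {
        if (windowSize < 2) {
            throw new IllegalArgumentException("windowSize must be at least 2");
        }
        this.windowSize = windowSize;
        this.minTravel = minTravel;
    }

    /** Call when no hand is detected, so stale positions cannot form a swipe. */
    void reset() {
        recentX.clear();
    }

    /** Feeds one frame's hand position and returns the recognized command, if any. */
    Command update(double x) {
        recentX.addLast(x);
        if (recentX.size() > windowSize) {
            recentX.removeFirst(); // keep only the last windowSize positions
        }
        double travel = recentX.peekLast() - recentX.peekFirst();
        if (Math.abs(travel) < minTravel) {
            return Command.NONE;
        }
        // Start over, so the positions that formed this swipe cannot trigger another command.
        recentX.clear();
        return travel > 0 ? Command.NEXT_PAGE : Command.PREVIOUS_PAGE;
    }

    public static void main(String[] args) {
        SwipeRecognizer recognizer = new SwipeRecognizer(10, 0.5);
        // The hand moves from the left towards the right over five frames.
        for (double x : new double[] {0.1, 0.2, 0.35, 0.5, 0.65}) {
            System.out.println(recognizer.update(x));
        }
    }
}
```

Running `main` prints `NONE` four times and then `NEXT_PAGE`. A larger `minTravel` reduces accidental triggers at the cost of larger arm movements. A smaller `windowSize` ignores slow drifting of the hand.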

When using the application, the user moves his or her hands in front of the webcam. The application recognizes a gesture (e.g., a one-hand movement from the left side of the webcam's view to the right side) and executes a command (e.g., move to the next page of a document).

Before the document-browsing and PDF-reading capabilities can be controlled via gestures, the user has to calibrate*** the application to recognize their hands. That is, the user has to tell the program what the skin color of their hands is. This allows the program to recognize hands as objects with which gestures can be performed. To make the calibration process as easy as possible, BodyControlledReader has a built-in calibrator application.

How usable is BodyControlledReader?

Using gestures, one can control the program quite well: it misinterprets only a few gestures (approximately 10 percent of all gestures performed). The accuracy of the gesture detection, however, depends largely on the user's experience and on how well the hand detection has been calibrated. One downside of controlling an application with gestures is that the gestures have to be quite large. When performing many gestures over a long period of time, the user's arms become tired.

From a technical perspective, BodyControlledReader is certainly usable: the program recognizes gestures and translates them into actions. From a practical standpoint, however, reading documents and navigating through them with gestures is probably not an optimal combination, since intensive use tires the arms. It is therefore probably more practical to use the arrow keys on a keyboard for document navigation. When a keyboard is not available, however, gesture-controlled programs or devices do have their uses.
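The calibration step can be illustrated with a simple color-thresholding sketch. It assumes, hypothetically, that calibration samples a few pixels from the user's hand. The code works in three steps:

- It learns a per-channel RGB range from those samples and widens the range by a margin.
- It marks every frame pixel inside that range as skin (the foreground).
- It reports the average x position of the skin pixels.

This plain RGB range is a swapped-in, deliberately naive technique, not the project's own algorithm. `SkinColorCalibrator`, `isSkin`, `handCentroidX` and the margin value are hypothetical names and parameters. Pixels are assumed to be packed ints as returned by `java.awt.image.BufferedImage.getRGB`.

```java
import java.util.Arrays;

/**
 * Hypothetical sketch: skin-color calibration and hand segmentation using a
 * simple per-channel RGB range. Not the project's actual algorithm.
 */
class SkinColorCalibrator {
    private final int[] lo = new int[3]; // lower bound per channel (R, G, B)
    private final int[] hi = new int[3]; // upper bound per channel (R, G, B)

    /** Learns the skin range from pixels sampled on the user's hand, widened by margin. */
    SkinColorCalibrator(int[] handSamples, int margin) {
        if (handSamples.length == 0) {
            throw new IllegalArgumentException("at least one calibration sample is required");
        }
        Arrays.fill(lo, 255);
        Arrays.fill(hi, 0);
        for (int rgb : handSamples) {
            int[] c = channels(rgb);
            for (int i = 0; i < 3; i++) {
                lo[i] = Math.min(lo[i], c[i]);
                hi[i] = Math.max(hi[i], c[i]);
            }
        }
        // Widen the range so slightly different shades of the same hand still match.
        for (int i = 0; i < 3; i++) {
            lo[i] = Math.max(0, lo[i] - margin);
            hi[i] = Math.min(255, hi[i] + margin);
        }
    }

    /** Splits a packed 0xAARRGGBB pixel into {R, G, B}; the alpha byte is ignored. */
    private static int[] channels(int rgb) {
        return new int[] {(rgb >> 16) & 0xFF, (rgb >> 8) & 0xFF, rgb & 0xFF};
    }

    /** True if every channel of the pixel lies inside the calibrated range. */
    boolean isSkin(int rgb) {
        int[] c = channels(rgb);
        for (int i = 0; i < 3; i++) {
            if (c[i] < lo[i] || c[i] > hi[i]) {
                return false;
            }
        }
        return true;
    }

    /**
     * Returns the mean x coordinate of all skin pixels divided by the frame width
     * (so 0.0 is the left edge), or -1 if no skin pixel was found.
     * The frame is stored row by row in a flat array.
     */
    double handCentroidX(int[] pixels, int width) {
        if (width <= 0) {
            throw new IllegalArgumentException("width must be positive");
        }
        long sumX = 0;
        int count = 0;
        for (int i = 0; i < pixels.length; i++) {
            if (isSkin(pixels[i])) {
                sumX += i % width; // column of pixel i
                count++;
            }
        }
        return count == 0 ? -1 : (double) sumX / count / width;
    }

    public static void main(String[] args) {
        // Pixels sampled from the user's hand during calibration (hypothetical values).
        int[] samples = {0xC89678, 0xD2A082};
        SkinColorCalibrator calibrator = new SkinColorCalibrator(samples, 10);
        // A 4x1 frame: two dark background pixels, then two hand-colored pixels.
        int[] frame = {0x000000, 0x202020, 0xC89678, 0xCD9B7D};
        System.out.println(calibrator.handCentroidX(frame, 4));
    }
}
```

Running `main` prints `0.625`. The two hand pixels sit at x = 2 and x = 3 of a 4-pixel-wide frame, so their mean x of 2.5 divided by the width gives 0.625. Color spaces that separate brightness from color, such as HSV or YCrCb, are often preferred for skin detection. They tend to be less sensitive to the lighting changes that make calibration necessary.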
For example, while watching television, a program like this should make it possible to turn the volume up or down, or to switch to the next channel, without having to find the remote control.

Footnotes

* One student asked to remain anonymous.

** Please note that the project name refers to the entire human body (*Body*ControlledReader) instead of just the hands. When the project name was chosen, it was not yet known which body part would be used to make gestures. The alternatives considered were eye tracking, head tracking and hand tracking (gestures). Hand tracking was chosen for its intuitiveness and relative ease of implementation.

*** Calibration is performed to reduce the impact of changing lighting conditions. Rapid changes in lighting are caused by the webcam automatically adjusting to the lighting conditions of the environment. Manually telling the application the skin color of one's hands makes the hand-detection algorithm's job easier: it must separate the foreground of the webcam image (the user's hands) from the background.

Page last modified on March 06, 2013, at 12:46 PM.